Use sklearn’s KNN imputer to impute missing values on a dataframe

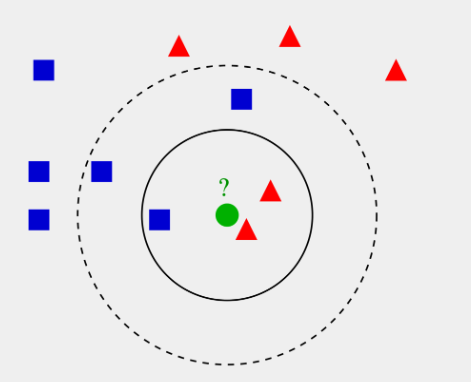

The K Nearest Neighbours algorithm is a simple supervised machine learning algorithm that can be used to solve both classification and regression problems. KNN works by computing the distance between a query and every example in the data, selecting the specified number of examples (K) closest to the query, and then either voting for the most frequent label (in the case of classification) or averaging the labels (in the case of regression).
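To make those three steps concrete, here is a minimal sketch of KNN in plain NumPy. The function name `knn_predict`, its parameters, and the toy data are all made up for illustration, and the sketch assumes a plain Euclidean distance; it is not how sklearn implements KNN internally.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, query, k=3, task="classification"):
    """Hypothetical helper: predict for one query point from its k nearest examples."""
    X_train = np.asarray(X_train, dtype=float)
    query = np.asarray(query, dtype=float)

    # 1. Distance between the query and every example in the data.
    distances = np.linalg.norm(X_train - query, axis=1)

    # 2. Indices of the k examples closest to the query.
    nearest = np.argsort(distances)[:k]
    neighbour_labels = [y_train[i] for i in nearest]

    # 3. Vote (classification) or average (regression).
    if task == "classification":
        return Counter(neighbour_labels).most_common(1)[0][0]
    return float(np.mean(neighbour_labels))


# Toy data, invented for this example.
X = [[1.0, 1.0], [1.5, 2.0], [3.0, 4.0], [5.0, 7.0], [3.5, 5.0]]
labels = ["a", "a", "b", "b", "b"]
targets = [1.0, 2.0, 3.0, 4.0, 5.0]

predicted_label = knn_predict(X, labels, [1.2, 1.5], k=3)
predicted_value = knn_predict(X, targets, [1.2, 1.5], k=3, task="regression")
print(predicted_label, predicted_value)
```

With this toy data the three nearest examples are the first three rows, so the vote returns `"a"` and the regression average is `2.0`.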

Sklearn’s KNNImputer is a variation of the KNN algorithm. It is a transformer class that fills null values in a dataset using the K Nearest Neighbours approach. By default, a Euclidean distance metric that supports missing values, nan_euclidean_distances, is used to find the nearest neighbours. Each missing feature is imputed using values from the n_neighbors nearest neighbours that have a value for that feature. The neighbours' values for the feature are averaged uniformly or weighted by distance to each neighbour. If a sample has more than one feature missing, the neighbours for that sample can differ depending on the particular feature being imputed. When the number of available neighbours is less than n_neighbors and there are no defined distances to the training set, the training set average for that feature is used during imputation. If there is at least one neighbour with a defined distance, the weighted…
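As a rough illustration of the behaviour described above, the sketch below runs `KNNImputer` on a small dataframe. The column names and values are invented for the example, and `n_neighbors=2` with `weights="uniform"` is just one possible configuration; it assumes pandas and scikit-learn are installed.

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer
from sklearn.metrics.pairwise import nan_euclidean_distances

# Toy dataframe with two missing values (illustrative data only).
df = pd.DataFrame(
    {
        "f1": [1.0, 3.0, np.nan, 8.0],
        "f2": [2.0, 4.0, 6.0, 8.0],
        "f3": [np.nan, 3.0, 5.0, 7.0],
    }
)

# Pairwise distances that skip missing coordinates and rescale the rest;
# this is the default metric KNNImputer uses to rank neighbours.
distances = nan_euclidean_distances(df.to_numpy())

# Fill each missing value with the uniform average of that feature over
# the 2 nearest rows that actually have a value for it.
imputer = KNNImputer(n_neighbors=2, weights="uniform")
imputed = pd.DataFrame(
    imputer.fit_transform(df), columns=df.columns, index=df.index
)
print(imputed)
```

Here the missing `f3` in the first row becomes `4.0` (the mean of 3 and 5 from its two nearest rows), and the missing `f1` in the third row becomes `5.5` (the mean of 3 and 8). Note that the two missing cells draw on different neighbours, because only rows with a value for the feature being imputed can act as donors. Passing `weights="distance"` instead would weight each neighbour by the inverse of its distance.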